Hey folks!

Just wanted to share some highlights from yesterday’s community webinar with Derek Manning from Superna. If you missed it, don’t worry - you can watch it below on YouTube, but here are the key points that really stood out to me.

The Reality Check: AI Security is a Data Problem

Derek started with something that really hit home - 99% of Fortune 500 companies now rely on data-driven intelligence to stay competitive. It’s not just an advantage anymore; it’s table stakes. But here’s the kicker: most organizations are focusing on the flashy AI/ML parts while leaving their data foundations vulnerable.

The webinar walked through what Derek calls the “data-driven intelligence workflow”:

- Clean → Remove PII, ensure compliance

- Pool → Centralize data (hello, VAST Data customers!)

- Integrate → Merge with external sources

- Enrich → Refine and enhance datasets

- Analyze → Extract insights and train models

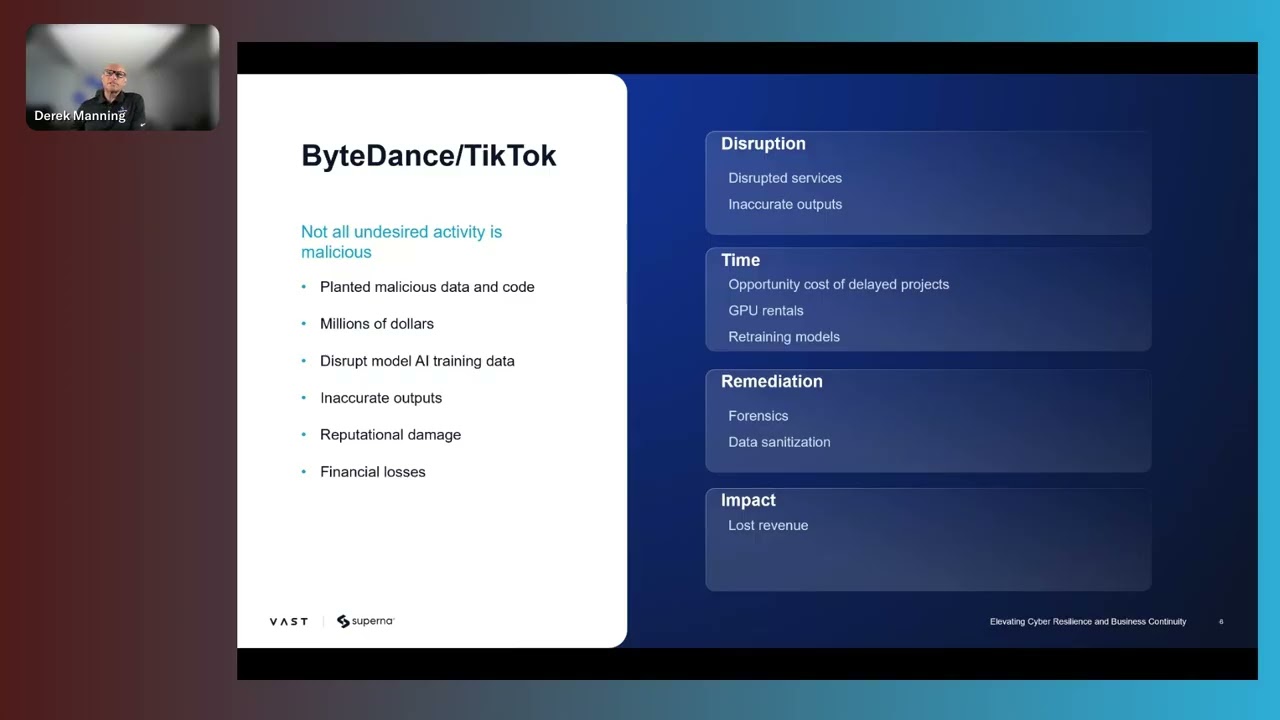

The ByteDance Wake-Up Call

One story that really drove the point home was the ByteDance incident from October 2024. An intern allegedly planted malicious data in their AI training pool, potentially causing $10M+ in damage. While ByteDance said the figures were exaggerated, it shows how vulnerable these workflows can be - and that’s just what we know about publicly!

Enter “Cyberstorage” - Gartner’s New Category

This was news to me: Gartner has created an entirely new security category called “cyberstorage”. Traditional security stops at the network/endpoint level, but attackers go straight for the storage once they’re in. Makes total sense when you think about it.

How Superna + VAST Work Together

The integration is pretty darn neat:

- Real-time audit stream monitoring via integrations with VMS

- API-based integration with VAST for automated snapshots and user lockouts

- User behavioral analysis to detect abnormal patterns

- Snapshots triggered by a compelling event such as a detection (not just scheduled ones)

- Zero-trust API integration with security tools like ServiceNow
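
To make the "user behavioral analysis" bullet a bit more concrete, here's a rough sketch of one common way to flag abnormal activity: compare a user's current rate of file operations against their own history. To be clear, this is my own illustration and not how Superna actually does it; the function name, the threshold, and the sample numbers are all made up.

```python
from statistics import mean, stdev


def is_abnormal(history, current, threshold=3.0):
    """Flag `current` if it sits more than `threshold` standard
    deviations above the user's historical mean (a simple z-score)."""
    if len(history) < 2:
        return False  # not enough baseline to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat baseline: any increase counts as abnormal.
        return current > mu
    return (current - mu) / sigma > threshold


# Hypothetical baseline: file writes per minute for one user.
baseline = [12, 9, 15, 11, 10, 14]

print(is_abnormal(baseline, 13))   # an ordinary minute
print(is_abnormal(baseline, 900))  # a mass-rewrite burst
```

A real product would look at far more signals than a single rate, but the idea of "your normal versus right now" is the same.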

What I found really cool was the “honeypot tripwire” approach - creating non-production shares that trigger alerts if accessed. Simple but very effective.
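
Here's a minimal sketch of the tripwire idea, assuming you already have audit events as simple records with a user and a path. The share name and the event format are hypothetical, and this isn't Superna's implementation, just the general shape of the check.

```python
from pathlib import PurePosixPath

# Hypothetical decoy share that no legitimate workflow should ever touch.
HONEYPOT_SHARES = [PurePosixPath("/shares/finance-archive-old")]


def touches_honeypot(path, honeypots=HONEYPOT_SHARES):
    """True if `path` is a honeypot share or anything underneath one."""
    p = PurePosixPath(path)
    return any(p == h or h in p.parents for h in honeypots)


def tripwire_alerts(events):
    """Return only the audit events that touched a honeypot share."""
    return [e for e in events if touches_honeypot(e["path"])]


# Hypothetical audit events.
events = [
    {"user": "alice", "path": "/shares/projects/report.docx"},
    {"user": "svc-backup", "path": "/shares/finance-archive-old/q3.xlsx"},
]

for alert in tripwire_alerts(events):
    print(f"ALERT: {alert['user']} accessed {alert['path']}")
```

Matching on path components (rather than a plain string prefix) avoids false hits on lookalike names such as `/shares/finance-archive-older`.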

The “Left of the Boom” Philosophy

Derek explained ransomware attacks using a timeline with a “boom” in the middle. Traditional detection often happens on the right side (users calling about encrypted files, pure nightmare fuel), but Superna focuses on the left side - catching attacks before and as they happen, not after the damage is done.

Their response is automated:

- Detect abnormal behavior

- Create compelling event snapshot

- Lock out suspicious user

- Alert security teams via webhooks

- Surgical recovery of affected files
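
The automated steps above can be sketched as a small orchestration function. Everything here is an assumption for illustration: the `ResponseActions` container and the stub functions stand in for whatever storage and alerting APIs you actually have, and I'm not reproducing any real VAST or Superna calls. Recovery is left out since it happens after a human has looked at the alert.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ResponseActions:
    """Hypothetical hooks into the storage platform and alerting tools."""
    snapshot: Callable[[str], str]   # takes a path, returns a snapshot id
    lock_out: Callable[[str], None]  # takes a user name
    notify: Callable[[dict], None]   # takes an alert payload


def respond(user, path, actions):
    """Snapshot first, then lock out the user, then alert the security team."""
    snapshot_id = actions.snapshot(path)
    actions.lock_out(user)
    alert = {"user": user, "path": path, "snapshot": snapshot_id}
    actions.notify(alert)
    return alert


# Stub implementations that just record what was called.
log = []


def fake_snapshot(path):
    log.append(("snapshot", path))
    return "snap-001"


def fake_lock_out(user):
    log.append(("lock_out", user))


def fake_notify(alert):
    log.append(("notify", alert["user"]))


actions = ResponseActions(fake_snapshot, fake_lock_out, fake_notify)
result = respond("mallory", "/shares/projects", actions)
print(log)
```

Taking the snapshot before anything else means there's a clean recovery point even if the later steps fail or take a while.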

Key points from the Q&A

- Data cleaning challenges: PII removal is the biggest hurdle for AI compliance

- Tools: There are many tools for identifying and cleaning data, everything from license plate/credit card detection to medical image depersonalization

- Data selection: When looking at what data to include in AI training, try to include as much as possible - emails, CRM data, historical reports. The “unknown unknowns” in your data might be the most valuable
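
Since credit card detection came up, here's a small sketch of what that kind of cleaning step can look like: find digit runs that look like card numbers, confirm them with the Luhn checksum, and redact the hits. This is my own example rather than any tool mentioned in the webinar, and the regex and placeholder text are assumptions; real PII tooling handles many more formats.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits):
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_cards(text):
    """Replace Luhn-valid card-like numbers with a placeholder."""
    def replace(match):
        digits = re.sub(r"[ -]", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()

    return CARD_PATTERN.sub(replace, text)


# 4111 1111 1111 1111 is a well-known test card number.
print(redact_cards("Card 4111 1111 1111 1111, order 1234567890123456"))
# -> Card [REDACTED CARD], order 1234567890123456
```

The Luhn check is what keeps long order numbers and other digit strings from being redacted by mistake.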

Bottom Line

If you’re running AI workloads on VAST, this strategic partnership makes a lot of sense. The security landscape is changing fast, and having data-layer protection that works natively with your storage platform seems like a no-brainer.

Thoughts on the cyberstorage concept? Would love to hear how others are thinking about securing their AI pipelines.